The deluge of images of partly clothed women – stripped by the Grok AI tool – on Elon Musk’s X has raised further questions over regulation of the technology. Is it legal to produce these images without the subject’s consent? Should they be taken off X?

In the UK alone there is some doubt over the answers to these questions. Regulation of social media is a nascent area, and regulation of the deployment of artificial intelligence even more so. There are laws in place to tackle the problem, such as the Online Safety Act, but the government has yet to introduce additional measures such as banning nudifying apps.

Is it illegal to post images of partly clothed people without their permission?

In England and Wales, it is a criminal offence under the Sexual Offences Act to share intimate images of someone without their consent, and this includes images created by AI. The law explains what constitutes an intimate image: one showing a person engaging in a “sexual act”, doing a “thing which a reasonable person would consider to be sexual”, or with their genitals, buttocks or breasts exposed.

This also includes being in underwear or wet or transparent clothing that exposes those body parts. However, according to Clare McGlynn, a professor of law at Durham University and an expert in pornography regulation, “just the prompt ‘bikini’ would not strictly be covered” by the law.

There is an offence under the Online Safety Act of posting messages containing false information with the intent of causing “non-trivial psychological or physical harm” to the recipient.

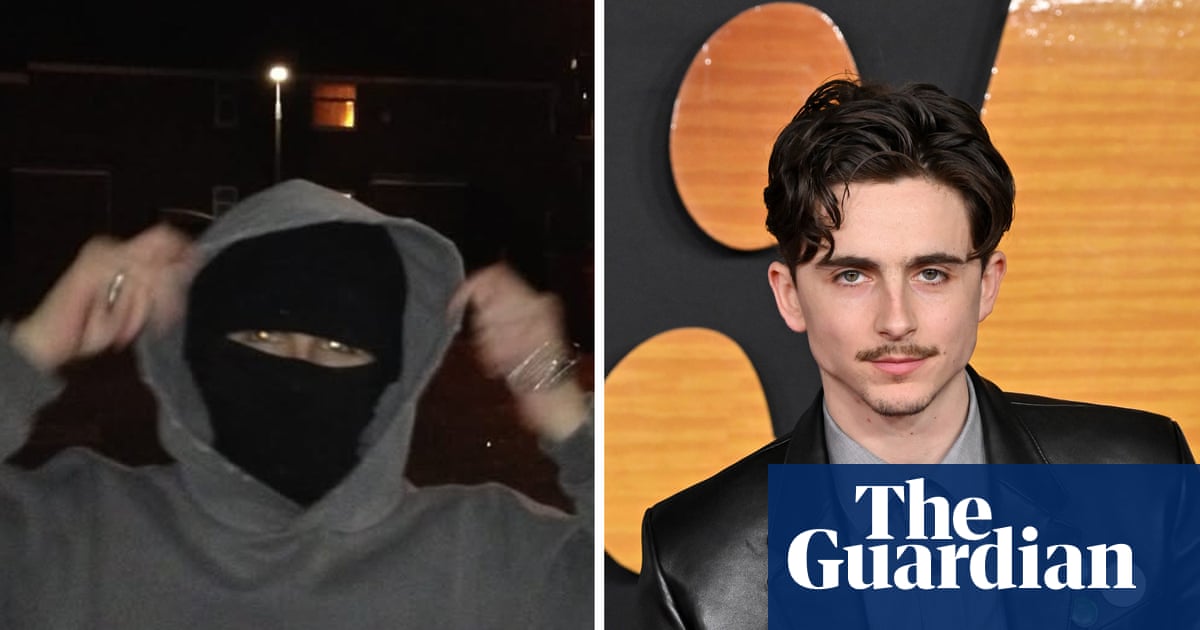

Changes to the law have had an impact. Brandon Tyler, from Braintree, Essex, was jailed for five years last year for posting in an online forum deepfake pornography of women he knew.

What about tech companies?

Under the Online Safety Act, which covers the entire UK, social media platforms have to act on intimate image abuse. They must assess the risk of this content appearing, put in place systems that reduce the likelihood of that content appearing in front of users, and take it down quickly when they become aware of it.

If the UK communications watchdog, Ofcom, thinks X has failed to meet these requirements, it can fine the platform up to 10% of its global revenue. Ofcom has made “urgent contact” with X and its parent, xAI, to find out what steps have been taken to comply with the act.

Grok, which like X is owned by Musk’s xAI, could also face censure. After reports that it has been used to produce adult pornography, Ofcom should investigate whether it has put in place adequate age-checking procedures to ensure that under-18s do not access the tool to create extreme content.

Are nudifying apps and websites illegal in the UK?

Currently, it is the sharing of non-consensual intimate images that is illegal – an offence known more colloquially as posting “revenge porn”.

The government has legislated, under the Data (Use and Access) Act for England and Wales, to ban creating such images or requesting their creation. However, this law is not yet in force, making it impossible to take enforcement action against any individual who creates or requests the creation of such images.

A government spokesperson said: “We refuse to tolerate this degrading and harmful behaviour, which is why we have also introduced legislation to ban their creation without consent.” It is not clear why, six months after the passing of the legislation, the government has still not brought it into force.

A further complication is the question of whether the UK authorities will have jurisdiction. An offence must have a “substantial connection with this jurisdiction”; there could be practical difficulties in prosecuting if the perpetrator was based overseas.

What if Grok has been used to produce child sexual abuse imagery?

The Internet Watch Foundation, a child safety watchdog, has reported users of a darkweb forum boasting of using Grok to create indecent images of children. Analysts at the IWF say the images they have seen constitute child sexual abuse material under UK law.

It is an offence to take, make, distribute, possess or publish an indecent photograph or pseudo-photograph – such as an AI image – of an under-18-year-old. According to Ofcom guidance for social media platforms, “content depicting a child in erotic poses without sexual activity should be considered indecent” and an image is indecent “where the inference is that the child is … associated with something sexually suggestive”.

What can I do if an image of me is manipulated on X?

Individuals’ images are protected by the UK GDPR. People have the right to request that manipulated images be erased by X if they have been shared on the platform. An individual’s photograph counts as personal data; when a platform is processing this data, it must do so in compliance with the law, and non-consensual manipulation of the image will breach the UK GDPR.

Individuals have the right to escalate a complaint to the Information Commissioner’s Office if X fails to remove the images, because this may be a breach of UK data protection law.

A deepfake that falsely portrays you in a manner that damages your reputation could prove grounds for a defamation claim – but this would be expensive. Alternatively, you could contact the Revenge Porn Helpline, a government-funded organisation that helps to get non-consensual intimate images removed swiftly from the internet.
