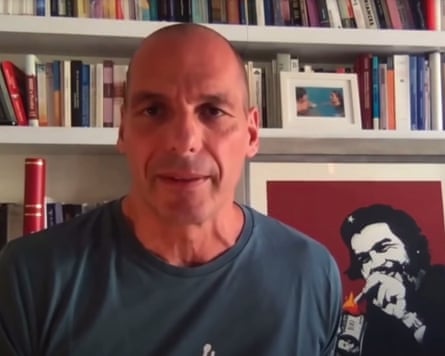

It was my blue shirt, a present from my sister-in-law, that gave it all away. It made me think of Yakov Petrovich Golyadkin, the lowly bureaucrat in Fyodor Dostoevsky’s novella The Double, a disconcerting study of the fragmented self within a vast, impersonal feudal system.

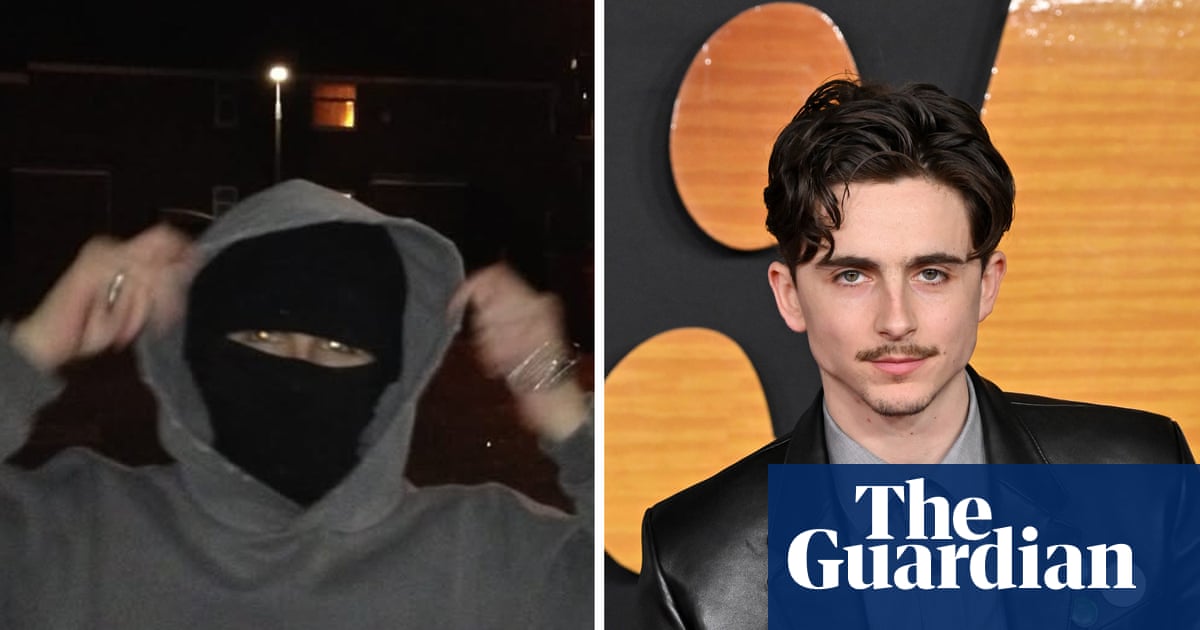

It all started with a message from an esteemed colleague congratulating me on a video talk on some geopolitical theme. When I clicked on the attached YouTube link to recall what I had said, I began to worry that my memory is not what it used to be. When did I record said video? A couple of minutes in, I knew there was something wrong. Not because I found fault in what I was saying, but because I realised that the video showed me sitting at my Athens office desk wearing that blue shirt, which had never left my island home. It was, as it turned out, a video featuring some deepfake AI doppelganger of me.

Since then, hundreds of such videos, bearing my face and synthesising my voice, have proliferated across YouTube and social media. Even this weekend, there has been another crop, depicting a deepfaked me saying fictitious things about the coup in Venezuela. They lecture, they say things I might have said, sometimes intermingled with things I would never say. They rage, they pontificate. Some are crude, others unsettlingly persuasive. Supporters send them to me, asking: “Yanis, did you really say that?” Opponents circulate them as proof of my idiocy. Far worse, some argue that my doppelgangers are more articulate and cogent than me. And so I find myself in the bizarre position of being a spectator to my own digital puppetry, a phantom in a technofeudal machine I have long argued is not merely broken, but engineered to disempower.

My initial reaction was to write to Google, Meta and the rest to demand that they take down these videos. I filled in several forms in anger before, a week or more later, some of these channels and videos were taken down, only to reappear instantly under different guises. Within days I had given up: whatever I did, however many hours I spent every day trying my luck at having big tech take down my AI doppelgangers, many more would grow back, Hydra-like.

Soon, rage gave way to contemplation. Was I not, after all, the one who argued that big tech did not merely digitise capitalism but in fact spearheaded a great transformation, turning markets into cloud fiefs and profit into cloud rents? Are my AI doppelgangers not the perfect confirmation that, in this technofeudal reality, the liberal individual is dead and buried?

Acquiescing to the partial loss of self-ownership, I sought solace in the rationalisation of these deepfakes as the ultimate act of feudal enclosure, proof that under technofeudalism we own nothing – not our labour’s data output, not our social graphs and now not even our audiovisual identity. Our new lords see us as tenants on their cloud lands, androids whose likeness they can appropriate at will to sow confusion, to muddy discourse, to drown genuine dissent in a cacophony of synthetic noise created for this purpose.

But then a sunnier thought hit me, one that harks back to ancient Athens. What if my AI doppelgangers were harbingers of isegoria (ἰσηγορία), a principle as bright, promising and absent as genuine democracy itself? When I asked several versions of AI chatbots to define it, they all dutifully misrepresented its meaning, defining isegoria as equality of speech, or the right to be heard, or the freedom to address the assembly. But that’s not what the Athenians meant by the word. In fact, to them isegoria meant the exact opposite of today’s “free speech”, which they would dismiss as the abstract right to shout into the void. To the Athenians, it meant the right to have your views judged seriously, on their merits, independently of who you are or indeed how well you phrase them.

Might AI deepfakes salvage isegoria from the clutches of our technofeudal dystopia? When we realise that it is impossible to verify who is speaking in a YouTube video, might we be forced to judge the merits of what is being said, rather than who is saying it? In the process of debasing authenticity, could big tech have inadvertently given isegoria a chance? These questions offered a glimmer of hope.

It was the hope that the spectre of democracy may still be hovering over our heads if only we can find the motivation to look up, to engage in the slow, difficult, democratic labour that the algorithmic feed was designed to obliterate: critical evaluation of views and arguments thrown at us. Alas, this hope, though tangible, is insufficient as long as our technofeudal lords retain two colossal, asymmetrical advantages.

First, they own the agora itself – the servers, the feeds, the algorithmic means of communication. They can anoint their own speech as authentic with digital seals while drowning ours in a quagmire of doubt and noise. The result? Not isegoria, but a digital divine right where truth is the patented property of power.

Second, and more cunningly, they need no deepfakes to rule. Their ideology is embedded in the machine: the power to extract surplus value from proletarians connected to the cloud through various digital devices, the logic of extracting cloud rents from vassal capitalists on their platforms, the tyranny of shareholder value, their imminent success at privatising money.

So our task is not to beg these lords for verification. Our task is political. We must socialise cloud capital, the all-powerful new force transforming society and destroying everything that makes humanism imaginable.

Until then, let our digital doppelgangers speak. Perhaps they will so saturate the spectacle that we finally stop listening for our voice and start judging the arguments on their own terms. This is perhaps the most paradoxical shard of hope in a hall of mirrors. But in this carnival, we grasp at every fragment we can.

Yanis Varoufakis is an economist, politician and author. His latest book is Raise Your Soul! A Personal History of Resistance.
